Time to try posting something more substantial. Here is one prepared earlier.

This article discusses the merits and otherwise of comparison sorting algorithms in general use, based on timings and comparison count data. It was found that performance of a fully optimized quicksort degraded when faced with so-called median of three killer data compared with randomly ordered data, but not to the extent that might have been expected. Mergesort was able to sort data with this configuration substantially faster than random data, outperforming all other algorithms tested, and was only marginally slower than quicksort on random data. As noted elsewhere, mergesort has an advantage over quicksort when there is any relationship between the key used to obtain an existing ordering and the required key, and it is suggested that its additional space requirement is unlikely to be a problem where memory is not at an absolute premium. Mergesort sorts a 1,024,000 item list with a median of three killer configuration in less than 1/3 the time taken by a library function apparently engineered to deal with this type of data and appears to be a median of three killer-Killer.

Comparison Sorting: The State of the Art

One of the problems I have set myself currently is to implement an algorithm to comparison sort a list of randomly ordered data faster than quicksort. The challenge comes from a Delphi Magazine article [1] where I suggested that it could be done. This blog reports findings from the article and examines relative performance of quicksort and mergesort to prepare for a look at sorting algorithms written in Java. You never know, something might just drop out.

The main points of the Delphi article were that memory usage was no longer the critical factor it had once been for general sorting on PCs and workstations and that mergesort was the algorithm of choice for sorting certain large data sets. Naturally the scenario under consideration related to Delphi where heap management did not include compaction and the machine stack provided a low overhead location for a buffer, mostly irrelevant to Java but not so the other findings. A library class TList came with a Sort method that accepted a comparison function and used a concise but unoptimized version of quicksort. TList exposes its underlying dynamically allocated array as a read property so that any sorting method can be applied to it.

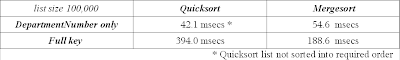

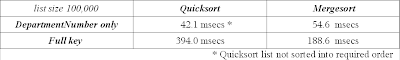

Mergesort beats quicksort even when carrying the full key . . .

The article quoted a case where a list in StaffNumber within Name order was extracted from a database and sorted - StaffNumber within Name within DepartmentNumber. Numbers were integers and Name was a single-word string. Actual surnames from the UK were used, occurrence weighted based on some token occurrence data. As a stable sort, mergesort could re-sort the list on a single key. Around an 80% sort time reduction was found: mergesort on DepartmentNumber only vs. quicksort on the full key.
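The single-key re-sort depends only on stability. As a minimal sketch in Python, whose built-in sort is stable, the following uses a handful of made-up staff records (the names, numbers and tuple layout are illustrative assumptions, not data from the article):

```python
# Hypothetical records: (department_number, name, staff_number).
# The list arrives already ordered by StaffNumber within Name.
staff = [
    (20, "Adams", 104),
    (10, "Adams", 117),
    (20, "Baker", 101),
    (10, "Baker", 102),
    (10, "Baker", 130),
    (20, "Clark", 109),
]

# Stable sort on DepartmentNumber alone: records with equal department keep
# their existing Name/StaffNumber order, so the three-level ordering falls out.
by_department = sorted(staff, key=lambda rec: rec[0])

# Sorting on the full key gives the same list but has to examine nested keys.
by_full_key = sorted(staff, key=lambda rec: (rec[0], rec[1], rec[2]))
```

Both results are identical, but the first sort only ever looks at one integer per record.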

An interesting point that came to light was that mergesort still showed better performance even when the full key was used by both algorithms. As conjectured at the time and since confirmed, mergesort's stable characteristic reduced the number of times nested keys were accessed to decide a comparison. Timings for these unoptimized sorting algorithms running on a current desktop machine, maximum department size 500, are:

Mergesort can beat quicksort hands down for many sorting tasks but for a data set with existing ordering unrelated to the required key quicksort has the edge.

Mergesort vs. Quicksort

Mergesort and quicksort are the current algorithms of choice for in-memory comparison sorting. They both divide the list to be sorted into sublists and this approach makes adaptation for multiprocessing relatively simple. Quicksort, devised by C.A.R. (Tony) Hoare [2], uses relocation of elements above or below a partition value, initially for the complete list and subsequently for each partitioned sublist. Mergesort, sometimes said to be based on an idea proposed by John von Neumann, uses repeated binary division of the list into sublists, sorts small sublists and merges adjacent sorted sublist pairs.

Quicksort works in place and requires little additional storage but is not stable: relative locations of same key elements are modified. Stable variants of quicksort need storage areas outside the list and using a buffer smaller than the complete list will likely get worse performance than mergesort. Mergesort is inherently stable but an implementation that provides efficiency approximating to quicksort needs a buffer to store items during a merge. Merging with minimum element relocations and therefore best efficiency requires a buffer half the size of the complete list.
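To make the half-size buffer concrete, here is a rough Python sketch of a stable mergesort that buffers only the left-hand run during each merge. It is an illustration under my own naming (`merge_half_buffer`, `mergesort`, the `key` parameter), not the Delphi implementation discussed in the article, and it omits the small-sublist sort and other optimizations.

```python
def merge_half_buffer(a, lo, mid, hi, key):
    """Merge sorted runs a[lo:mid] and a[mid:hi] in place, buffering only the left run."""
    buf = a[lo:mid]                  # never more than half of the span being merged
    i, j, k = 0, mid, lo
    while i < len(buf) and j < hi:
        # Take from the right run only when strictly smaller, so equal keys keep their order.
        if key(a[j]) < key(buf[i]):
            a[k] = a[j]
            j += 1
        else:
            a[k] = buf[i]
            i += 1
        k += 1
    # Copy back what is left in the buffer; leftover right-run items are already in place.
    a[k:k + len(buf) - i] = buf[i:]


def mergesort(a, key=lambda x: x, lo=0, hi=None):
    """Stable top-down mergesort of a[lo:hi]."""
    if hi is None:
        hi = len(a)
    if hi - lo < 2:
        return
    mid = (lo + hi) // 2
    mergesort(a, key, lo, mid)
    mergesort(a, key, mid, hi)
    merge_half_buffer(a, lo, mid, hi, key)
```

The write position can never overtake the read position in the right run, which is why the right run needs no buffer of its own.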

Quicksort Nemeses

Quicksort has an average time complexity of O(n log n): on average it will take 2n ln(n) comparisons to sort a list. But, if the heuristic for choosing a partition value or 'pivot' is to use the first element of the list, basic quicksort will use at least (n² - n) / 2 comparisons to sort a reverse order list or to check that an in-order list does not need any elements moving. This is O(n²) 'quadratic' complexity, characteristic of the most basic sorting algorithms. Sorting 10,000 elements in reverse order takes around 49,995,000 comparisons as opposed to the 184,207 average. An obvious solution is not to use the first element. Why not the middle element, for example? The problem with setting the partition value in this way is that there will always be some list arrangements that get quadratic complexity.
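The figures quoted can be checked with a few lines of Python. The counting function below is a simplified model of a first-element-pivot quicksort (it partitions into new lists rather than in place, and uses an explicit stack to avoid Python's recursion limit); the function names are mine.

```python
import math


def count_comparisons_first_pivot(items):
    """Count key comparisons made by a basic quicksort that pivots on the first element."""
    comparisons = 0
    pending = [list(items)]           # explicit stack of sublists still to be partitioned
    while pending:
        sub = pending.pop()
        if len(sub) < 2:
            continue
        pivot, rest = sub[0], sub[1:]
        smaller, larger = [], []
        for x in rest:
            comparisons += 1          # one comparison per non-pivot element
            (smaller if x < pivot else larger).append(x)
        pending.append(smaller)
        pending.append(larger)
    return comparisons


def quadratic_count(n):
    """(n^2 - n) / 2, the count for in-order or reverse-order input."""
    return (n * n - n) // 2


def average_estimate(n):
    """2 n ln(n), the average-case estimate quoted in the text."""
    return 2 * n * math.log(n)
```

For n = 10,000 the two formulas give 49,995,000 and roughly 184,207, matching the numbers above, and the counting function reproduces the quadratic figure for reverse-order input.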

Various schemes have been proposed to select a good pivot, including random selection. The more complex a selection scheme, the higher its overhead is likely to be, and it is hard if not impossible to show that a particular scheme will get complete immunity for all possible input configurations. Median of three selection carries a low overhead and appears to get superior partitioning for a wide range of list configurations [3]. Three elements are selected, generally the first, middle and last elements, and compared and/or sorted. The median supplies the partition value. The quicksort that I need to beat in the Faster Than Quicksort game uses median of three engineered to get an average overhead of less than one comparison per sublist.
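A plain version of median of three selection might look like the sketch below. It sorts the first, middle and last elements in place using up to three comparisons, so it does not include the engineering that brings the average overhead below one comparison per sublist; the function name is my own.

```python
def median_of_three(a, lo, hi):
    """Order a[lo], a[mid], a[hi] in place and return the median as the partition value."""
    mid = (lo + hi) // 2
    if a[mid] < a[lo]:
        a[lo], a[mid] = a[mid], a[lo]
    if a[hi] < a[lo]:
        a[lo], a[hi] = a[hi], a[lo]
    if a[hi] < a[mid]:
        a[mid], a[hi] = a[hi], a[mid]
    # Now a[lo] <= a[mid] <= a[hi].
    return a[mid]
```

A side effect of sorting the three samples is that the end elements are already on the correct side of the partition value, so a partitioning loop can skip them.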

In 1997 David Musser presented an analysis [4] that showed there were a significant number of list configurations that would drive a median of three selection quicksort quadratic: 'Median of Three Killers'. His remedy was to make the sorting algorithm monitor its recursion depth introspectively and if a preset depth was exceeded revert to a guaranteed O(n log n) sort, heapsort or perhaps mergesort. The dual mode algorithm was to be called 'introsort'. More recently it has been suggested that engineered median of three killers could be used to bring down a web-server in a denial of service attack. The current C++ STL sort method is an introsort implementation that reverts to heapsort making it easy to include an example of this approach in a test application, maybe. Ralph Unden [5] has posted a Java class that outputs lists of median of three killer integer values and his paper provides further explanation of the problem with a minimum of mathematical analysis.

Relative Performance

Time taken to sort a list results from the data item comparisons and assignments used together with the amount of supporting processing carried out. Supporting processing includes computation of factors relating to the current list, updating indices, function calls and so on. Counting comparisons and assignments gives a platform-independent measure of performance, but it can only be indicative. Suppose, for example, that swapping two items is done using a register as a temporary location: does this count as two assignments or three? Processing will take about 2/3 of the time needed to swap the items with one item stored temporarily in a buffer. Changes to the ratio of comparison cost to assignment cost and the effect of CPU cache misses are other factors.

The method preferred here is to show actual timings taken under the rules of the Faster Than Quicksort game, which I think give a realistic assessment of relative performance at least for native code on the PC. These rules are simple and designed to make tests relate to working with records and objects rather than lists of numbers. The dynamic is different because even basic comparisons take substantially longer than assignments.

The rules are: Sorting is to be done using a comparison function so that complex keys can be substituted. The list holds references to data objects with at least a 32 byte memory footprint so that substantial portions of the in-memory arrangement are not covered by a single CPU cache line. Object memory location must not have any sequential relationship with sorted list location. Armed with the timings we can then try to find out what went wrong using comparison counts and other statistics.
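A test list in the spirit of these rules, with a comparison function that counts its own calls, could be set up along the following lines. This is a Python sketch with invented names (`Record`, `make_counting_compare`, `build_list`); Python gives no control over object placement or footprint, so the 32 byte padding field only gestures at the memory rules.

```python
import random
from functools import cmp_to_key


class Record:
    """Data object referenced from the list; the padding stands in for a 32 byte footprint."""

    def __init__(self, key):
        self.key = key
        self.padding = bytes(32)


def make_counting_compare():
    """Return a comparison function and a one-element list holding its call count."""
    calls = [0]

    def compare(x, y):
        calls[0] += 1
        return (x.key > y.key) - (x.key < y.key)   # negative, zero or positive

    return compare, calls


def build_list(size, seed=1):
    """Records are created in random key order, unrelated to their sorted positions."""
    rng = random.Random(seed)
    return [Record(rng.randrange(size)) for _ in range(size)]
```

Typical use is `compare, calls = make_counting_compare()` followed by `sorted(build_list(1000), key=cmp_to_key(compare))`, after which `calls[0]` holds the number of comparisons made.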

Algorithm Timings

Table 1 shows timings in milliseconds, rounded to 3 decimal places, for sorting random order lists on a single integer key. The rel. columns are performance relative to the fastest (tail call optimized) tcoQuicksort figures in the first column. The test machine is a fairly typical desktop: dual core Pentium® at 3.3GHz, 2GB RAM. For each list size, sorts of 50 different lists are timed: 25 generated using a Random() function plus the same lists in reversed order. Each timing uses an initial pass which is discarded and between 5 and 10 timed passes, fewer passes being used for larger lists (no worries, the test application does all this, not me). The figure shown is the average but the maximum and minimum times were also recorded, any marked discrepancy leading to retesting to confirm or reject the initial timing.
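The timing procedure described, one discarded pass followed by several timed passes with average, minimum and maximum recorded, can be sketched in Python as follows. The function name and parameters are hypothetical, and the original test application was of course native Delphi code, not this.

```python
import time


def time_sort(sort_fn, make_list, passes=5):
    """Time sort_fn on fresh lists from make_list; returns times in milliseconds."""
    sort_fn(make_list())                       # initial pass, discarded
    times_ms = []
    for _ in range(passes):
        data = make_list()                     # fresh unsorted copy, built outside the timed region
        start = time.perf_counter()
        sort_fn(data)
        times_ms.append((time.perf_counter() - start) * 1000.0)
    return {
        "avg": sum(times_ms) / len(times_ms),
        "min": min(times_ms),
        "max": max(times_ms),
    }
```

For example, `time_sort(list.sort, lambda: list(range(100000, 0, -1)), passes=10)` times the built-in sort on a reverse order list. A wide gap between the minimum and maximum is the cue for a retest.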

Results were pretty much as expected. On random data all the sorting methods do considerably better than O(n log₂ n) would suggest. No doubt other list configurations would restore the balance. The penalty for using full recursion over tail call optimization is quite small, peaking at 9% for a 4000 item list.

Stable quicksort with its huge list-size buffer finally creeps ahead of its rivals for the largest list. It seems likely that this effect results from separate location of items equal to the partition element by this implementation, excluding them from the sublists passed to the next level. Random generation puts a proportion of records with equal keys in all the lists and for a large list their number may become significant. The maximum key value used is size – 1, hopefully getting a similar proportion of same keys for all list sizes. An in-place quicksort can be implemented to provide separate location and exclusion [6] but some additional overhead is inevitable.
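The effect of locating equal items separately is easiest to see in a deliberately simple stable quicksort. The Python sketch below is not the implementation timed here: it builds new lists at every level (which together play the role of the list-size buffer) and uses a middle-element pivot, but it shows how items equal to the partition element drop out of the sublists passed down.

```python
def stable_quicksort(items, key=lambda x: x):
    """Return a new stably sorted list; equal-key items are excluded from the recursion."""
    if len(items) < 2:
        return list(items)
    pivot = key(items[len(items) // 2])
    less, equal, greater = [], [], []
    for x in items:                    # a single pass preserves the original relative order
        k = key(x)
        if k < pivot:
            less.append(x)
        elif k > pivot:
            greater.append(x)
        else:
            equal.append(x)            # already in final position relative to the other two
    return stable_quicksort(less, key) + equal + stable_quicksort(greater, key)
```

The more duplicate keys a list contains, the larger the `equal` group and the less work is left for the next level.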

The timings shown are probably a bit unfair on mergesort. Currently the problem is not lack of merge optimizations but may be the assembly language block-move routine used to deal with out of order sublists, still the same as for the Delphi Magazine article. It was always quite crude but changes to CPU architecture since P III have made it even less suitable. Java sort functions use mergesort with a list sized buffer and it will be interesting to see whether performance can be improved using current optimizations and a half-size buffer.

The data for unoptimized TList.Sort shows that optimization is always worthwhile for random data, particularly for smaller list sizes. Processing an in-order or reverse order list, Sort fares better because the partition element is not swapped out to reduce partitioned elements by one. The effect of leaving the partition element in place, a design decision made in [6], needs further investigation.

Timings for the C++ STL sort() function are not shown. It was clearly going to be impossible to get sort() from the current version of the STL to compile on the author's system without far more work than time available permitted, if at all. Data obtained using sort() from the template library supplied with Borland Developer Studio 2006 was so far out of kilter with other timings that it was felt that some effect other than the quality of the algorithm must be responsible. This is a pity because a comparison of timed performance on median of three killer lists would have been informative. As a substitute, counts of calls to the comparison function are used.

Median of Three Killers

Results are shown in Table 2. The four left-hand columns show calls for lists of random data. Comparisons for median of three killer configurations are on the right.

Killer configurations have an adverse effect on tcoQuicksort and TList.Sort. The quicksort used by TList.Sort shows almost quadratic behaviour, a bit unfair seeing the implementation tested uses a middle element pivot, not median of three. The effect on tcoQuicksort approximately doubles the number of comparisons needed to sort a list of the same size. The fully recursive version suffers the same fate: its implementation is identical except for the tail call optimization. Taking list size to the next level, sorting a list with 2,048,000 elements, tcoQuicksort uses 47,299,055 comparisons on random data and 106,537,288 comparisons on a killer sequence. The ratio remains approximately constant and is not quadratic. Mergesort and the library sort() use fewer comparisons on a killer configuration than on random data, mergesort drastically so. Actual timings showed mergesort sorting the list on an integer key in less than 1/3 the time taken by the library function, tallying with the ratio of comparisons used, and this margin is of course even more substantial for a complex key.
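As a quick arithmetic check on the 2,048,000 element figures, using only the counts quoted above (the variable names are mine):

```python
n = 2_048_000
random_comparisons = 47_299_055
killer_comparisons = 106_537_288

# Killer data costs tcoQuicksort a little over twice the comparisons of random data.
ratio = killer_comparisons / random_comparisons    # about 2.25

# Truly quadratic behaviour would need (n^2 - n) / 2 comparisons.
quadratic = (n * n - n) // 2                       # 2,097,150,976,000

# The killer count is a tiny fraction of the quadratic figure.
fraction_of_quadratic = killer_comparisons / quadratic
```

At around 0.005% of the quadratic count, the killer sequence is a nuisance for this quicksort rather than a catastrophe.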

Conclusions

Quicksort provides fast sorting of randomly ordered data but can be affected adversely by certain data configurations. Optimizations may contain this problem for certain configurations or aggravate it for others. For the reason given tcoQuicksort uses around 66 million comparisons to sort a two million item list in reverse order as opposed to about 40 million used by TList.Sort.

Mergesort is a better all-rounder and can sort data ordered with an existing relationship to the required key faster than quicksort. Apparently it is also a Median of Three Killer-Killer! It may be possible to improve performance of the implementation tested here by writing a better block move routine but, because items located at opposite ends of the list to that required are moved late, mergesort can never surpass quicksort for sorting randomly ordered data.

It seems likely that best proofing against adverse data combined with best sorting speed can be achieved using a tail call optimized quicksort that checks for out of balance partitioning after the initial call and subsequently. An immediate switch to mergesort would reap the benefit of its superior efficiency on killer and other adverse configurations. A mergesort buffer can be allocated on the machine stack at any time during processing: a buffer only relates to a single recursion level and space beyond the stack pointer can be used by the merge routine on the way back to the root of the merge tree. The allocation overhead is minimal, ideally only a matter of checking stack headroom. When there is insufficient headroom to allocate an optimum size buffer, an alternative merging routine that uses available space can be called. If a language does not provide intrinsics for efficient stack allocation perhaps it should do so.

The downside of using two algorithms is that code size is increased. It may be that double sorting time on some data configurations for large lists is acceptable using an optimized quicksort. Alternatively random selection of median of three elements might provide a simple alternative. A local randomization scheme need not be elaborate, say setting the initial seed using a value from the current machine clock and a linear congruential generator on a smallish prime. This approach needs further investigation.
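To show how little machinery such a scheme needs, here is a Python sketch of a clock-seeded multiplicative linear congruential generator on a small prime, used to pick the three sample positions. The modulus 65537 and multiplier 75 are illustrative choices of mine (75 is a primitive root of 65537, so every state from 1 to 65536 is visited once per cycle), and the class and function names are hypothetical.

```python
import time

MODULUS = 65537        # a smallish prime, chosen for illustration
MULTIPLIER = 75        # primitive root of 65537, giving the full period of 65536


class SmallLcg:
    """Multiplicative linear congruential generator with a clock-derived default seed."""

    def __init__(self, seed=None):
        if seed is None:
            seed = time.time_ns()                  # value from the machine clock
        self.state = seed % (MODULUS - 1) + 1      # keep the state in 1..65536, never zero

    def next_index(self, lo, hi):
        """Advance the generator and map the new state onto an index in lo..hi."""
        self.state = (self.state * MULTIPLIER) % MODULUS
        return lo + self.state % (hi - lo + 1)


def random_median_of_three(a, lo, hi, rng):
    """Return the median of three elements sampled at generator-chosen positions."""
    samples = [a[rng.next_index(lo, hi)] for _ in range(3)]
    return sorted(samples)[1]
```

Two caveats for anyone taking this further: the three sampled positions may coincide, and with so small a modulus only the first 65536 offsets of a very long sublist can ever be chosen.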

References:

[1] Martin Humby. Buffered Sorting and Stack Buffers. Delphi Magazine Issue 113: January 2005.

[2] C. A. R. Hoare, Quicksort. Computer Journal Vol 5 (1): 10-16. 1962.

[3] Robert Sedgewick, Implementing Quicksort Programs, Communications of the ACM Vol 21(10) 847–857 October 1978.

[4] David R Musser, Introspective Sorting and Selection Algorithms, Computer Science Department, Rensselaer Polytechnic Institute, Troy, NY 12180, U.S.A. 1997.

[5] Ralph Unden, > here < and Implementierung und Untersuchung des introspektiven Sortieralgorithmus Introsort, Berufsakademie Stuttgart - Fachrichtung Informationstechnik, student paper April 2007

[6] Jon L Bentley, M Douglas McIlroy: Engineering a Sort Function. Software – Practice and Experience, Vol. 23(11), 1249-1265 November 1993.